I recently came across a scenario where we wanted to enable directory browsing on a folder in IIS. This would make the log files easier to access during development, as they were on a server in a different domain (a DMZ).

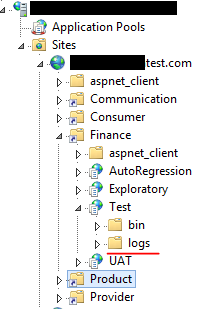

The log files are (currently) being created in a separate Logs folder within the site:

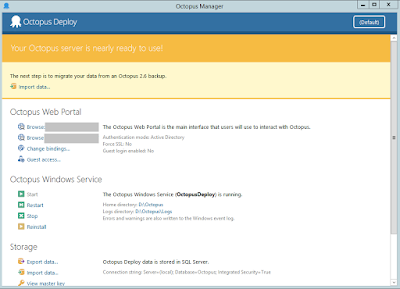

As the deployment is completely controlled by Octopus Deploy, we wanted the deployment itself to make the Logs folder accessible so that the files could be viewed.

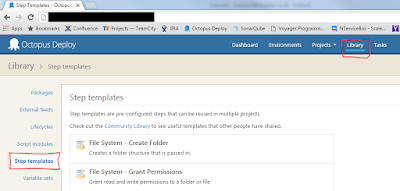

As Octopus Deploy allows you to import script templates (Powershell scripts) and there is a

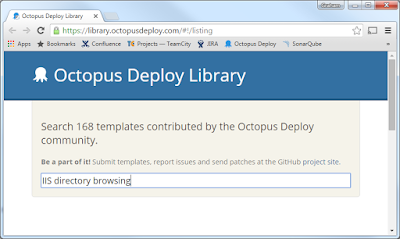

community library where scripts written by others exist my first stop was to look there to see if anything existed for what I wanted to do.

Sadly the script library couldn't help (this time), so it was time to get my hands dirty with a little bit of PowerShell.

The first step was to enable directory browsing in IIS. After Googling I found lots of pages that suggested running AppCmd.exe to perform the task, but I was hoping for something a bit more elegant than that.

The next step was to add a check (using Test-Path) to be sure that the path existed, then replace the hard-coded path with a variable, and I had my script:

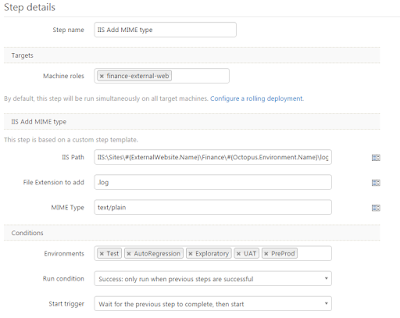
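For reference, a minimal sketch of this kind of script is below. It is an assumption rather than a copy of the script in the screenshot: it uses `Set-WebConfigurationProperty` from the WebAdministration module as the alternative to AppCmd.exe, and it reads the `IisPath` and `EnableDirectoryBrowsing` step template parameters through `$OctopusParameters`.

```powershell
# Sketch only: assumes the WebAdministration module is available on the target server.
Import-Module WebAdministration

# Step template parameters (IisPath and EnableDirectoryBrowsing).
$iisPath = $OctopusParameters['IisPath']
# Octopus passes parameter values as strings, so convert to a boolean explicitly.
$enable = [System.Convert]::ToBoolean($OctopusParameters['EnableDirectoryBrowsing'])

if (Test-Path $iisPath) {
    # Turn directory browsing on or off for the given IIS path only.
    Set-WebConfigurationProperty -Filter '/system.webServer/directoryBrowse' `
        -Name 'enabled' -Value $enable -PSPath $iisPath
    Write-Output "Directory browsing set to $enable for $iisPath"
}
else {
    # Warn rather than silently skip, so a wrong path is easy to spot in the task log.
    Write-Warning "IIS path not found: $iisPath"
}
```

Writing a warning when the path is missing is a design choice in this sketch. With a bare Test-Path check, a mistyped path would be skipped without any message.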

As the NServiceBus log files have a .log extension, a new MIME type needed to be added so that IIS would serve them to the browser.

Google also helped me here, and I came across a blog post by Seth Larson which covered it.

It needed a slight tweak to set the directory, and it also returned an error if the type already existed. I also added some variables (to help me with Octopus), and my script was:

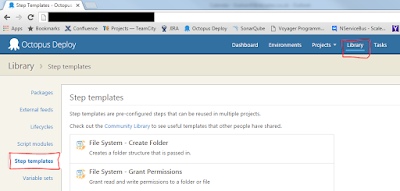
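As with the previous script, the following is only a sketch of how such a step could look, not the script from the screenshot. It assumes the WebAdministration cmdlets `Get-WebConfiguration` and `Add-WebConfigurationProperty`, and the parameter names `FileExtension` and `MimeType` are hypothetical, as is the `text/plain` example value.

```powershell
# Sketch only: assumes the WebAdministration module is available on the target server.
Import-Module WebAdministration

# Hypothetical parameter names, chosen for illustration.
$iisPath       = $OctopusParameters['IisPath']
$fileExtension = $OctopusParameters['FileExtension']   # e.g. .log
$mimeType      = $OctopusParameters['MimeType']        # e.g. text/plain

$filter = 'system.webServer/staticContent'

# Look for an existing mapping first, because adding a duplicate raises an error.
$existing = Get-WebConfiguration -PSPath $iisPath `
    -Filter "$filter/mimeMap[@fileExtension='$fileExtension']"

if ($existing) {
    Write-Output "MIME type for $fileExtension already exists at $iisPath"
}
else {
    # Add the mapping at the given IIS path so it applies to that folder only.
    Add-WebConfigurationProperty -PSPath $iisPath -Filter $filter -Name '.' `
        -Value @{ fileExtension = $fileExtension; mimeType = $mimeType }
    Write-Output "Added MIME type $mimeType for $fileExtension at $iisPath"
}
```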

The scripts needed to be added to Octopus so that they could be used. This is a relatively simple process (but may require Admin permissions - I'm not 100% sure):

Log in to Octopus, select Library and then Step Templates:

Select Add step template in the top corner:

Then select PowerShell from the list of options.

On the Step tab, paste in the PowerShell script:

On the Parameters tab I created two new parameters. Using parameters means that the values can be configured in Octopus for each project that uses the step.

IisPath:

EnableDirectoryBrowsing:

This process needed to be repeated for the 'Adding MIME type' script:

For this I added three parameters:

Now that the steps were added it was time to use them in our projects.

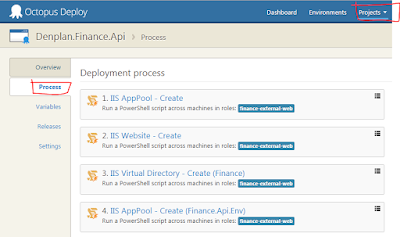

Select the project and select Process on the left hand side:

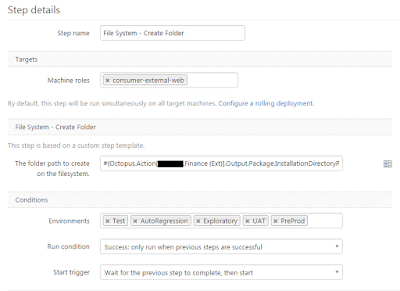

At the bottom of the steps select 'Add step', Octopus will automatically show the parameters that were configured in the step template. For the Directory browsing there were two parameters:

- IIS Path

  - `IIS:\Sites\#{ExternalWebsite.Name}\Finance\#{Octopus.Environment.Name}\Logs`

- Enable directory browsing

The IIS path uses some internal variables that we have configured to ensure that it uses the correct setting as the server changes during the CD pipeline.

I set the environments so that this would not run on our Production environment (and arguably I shouldn't have set it to run on PreProd). This is because we don't want the logs to be visible to everyone in live!

Adding the step for the MIME type was almost the same:

The only other step that needed to be added was one to create the logs folder, as it is not created as part of our deployment:
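A folder-creation step of this kind can be very small. The sketch below is an assumption about how it might be written. The `LogsFolder` parameter name is hypothetical, and it is expected to hold the physical folder path on disk rather than the `IIS:\` path used by the other steps.

```powershell
# Hypothetical parameter: the physical path of the Logs folder on the target server.
$logsFolder = $OctopusParameters['LogsFolder']

if (-not (Test-Path -Path $logsFolder -PathType Container)) {
    # -Force also creates any missing parent folders.
    New-Item -Path $logsFolder -ItemType Directory -Force | Out-Null
    Write-Output "Created $logsFolder"
}
else {
    Write-Output "$logsFolder already exists"
}
```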

The order of the steps was:

When I ran this I encountered a couple of problems:

Ensure that the IIS path is correct (especially when copying from one project to another).

I originally left the name Finance in the path when I set it up on Product. It didn't error, but it also didn't work!

Adding the MIME type checks the Web.config file of the project to be sure that it is valid.

TeamCity returned the following error when adding the MIME type:

Error: The 'autoDetect' attribute is invalid. Enum must be one of false, true, Unspecified

When I looked at the Web.config file, the setting was:

`<proxy autoDetect="True" />`

Changing the value to 'true' with a lowercase 't' resolved the issue.